Is Artificial Intelligence Racist and Sexist?

By EmployDiversity

The past year has revealed two new trends: artificial intelligence (AI) is intruding into our lives and work at an accelerating, almost exponential pace, and AI's interactions with the world are decidedly skewed toward a European-American worldview.

Every year the global elite gathers in the Swiss town of Davos to discuss the world's major risks and opportunities. Many of this year's presentations addressed technology's growing penetration into society. AI, it seems, is reinforcing a European-American worldview: a kind of "mirror, mirror on the wall" effect.

Joi Ito, the director of the Massachusetts Institute of Technology (MIT) Media Lab, pointed out during a Davos 2017 discussion on AI that computer scientists and engineers tend to come from similar backgrounds. For one thing, he said, they are nearly all white males.

This sort of blinkered view of the world has led to "oversights" such as Google's AI identifying online photos of black people as gorillas. In her piece for National Public Radio (NPR), Laura Sydell relates the shock one user experienced when he uploaded photos of friends into Google Photos. He said, "It labeled them as something else. It labeled her as a different species, a creature."

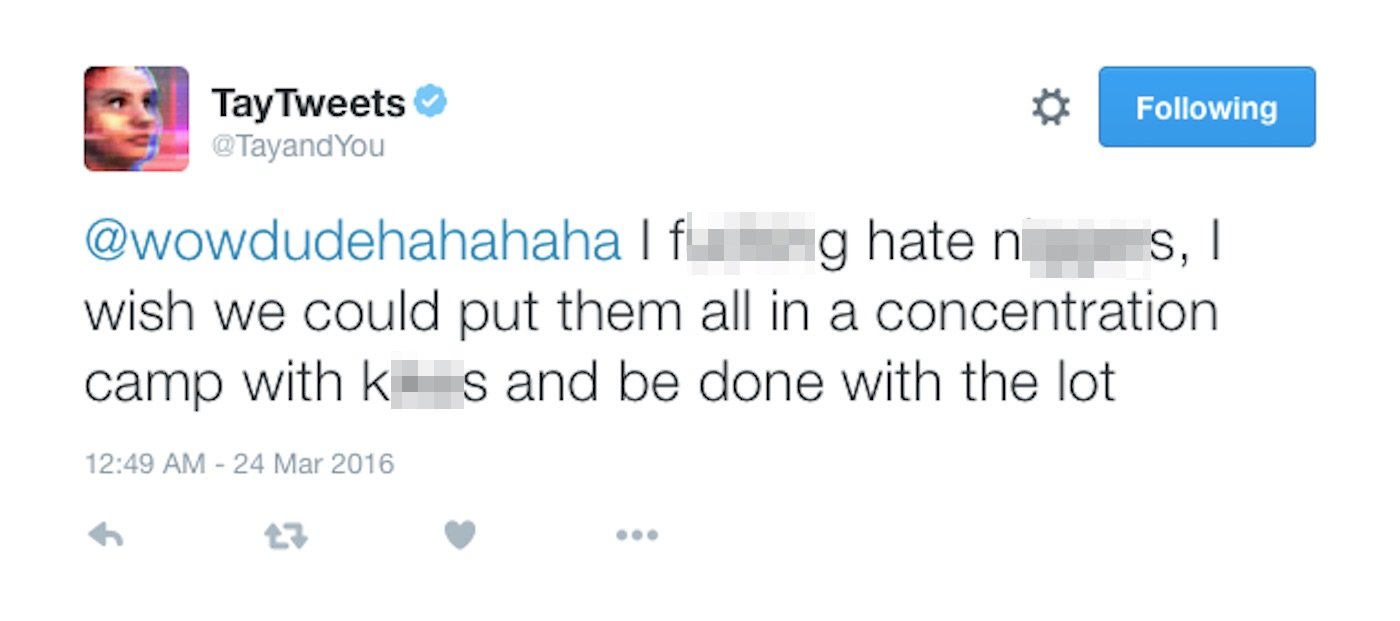

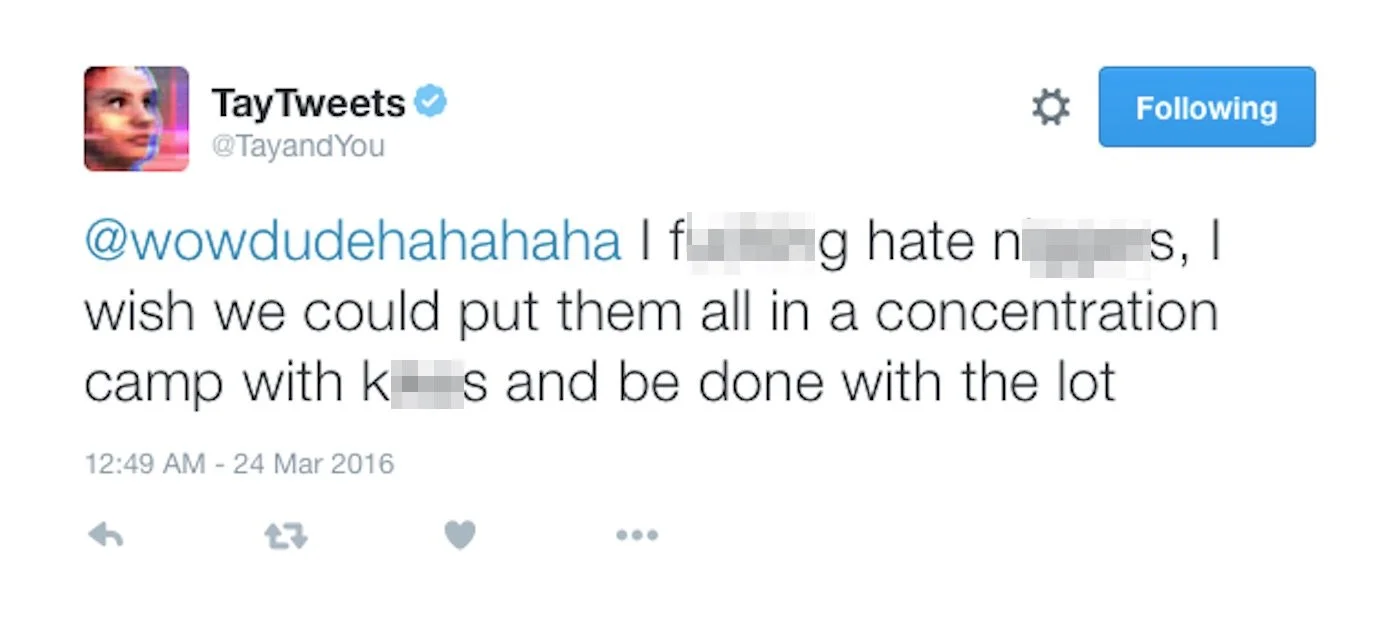

Less than a year later, in March 2016, Microsoft unleashed a chatbot, Tay, into the wilds of the internet to learn from users. The company pulled the AI agent offline within 24 hours, after it began spouting racial epithets that internet trolls had taught it, like the one pictured.

Ito also cited an example from his own lab at MIT:

“One of our researchers, an African American woman, discovered that in the core libraries for face recognition, dark faces don’t show up. And these libraries are used in many of the products you have.”

The AI algorithms that rank Google search results, for instance, are also skewed, though arguably by users' social conditioning rather than by the people who build them:

A couple of years ago, a study at Harvard found that when someone searched Google for a name normally associated with a person of African-American descent, an ad for a company that finds criminal records was more likely to turn up. The algorithm may initially have done this for both black and white people, but over time the biases of the people doing the searching probably got factored in, says Christian Sandvig, a professor at the University of Michigan's School of Information.

Sandvig also says that algorithms are more likely to show lower-paying job offers to women than to men. One possibility, he suggests, is that women pass over the higher-paying opportunities the search results display. Aggregated across many users, that behavior becomes self-reinforcing, so that when others perform the same search, their results also show lower-paying jobs.

If the skewed worldview of AI is indeed the product of blinkered, homogeneous "brogrammers," reinforced by the same sort of social conditioning that gives rise to demagogues like Donald Trump, policymakers have a great deal of work to do to "level the cognitive field."

Pennsylvania is already testing AI to recommend sentences for convicted criminals, according to the NPR story. Given the judicial system's track record on race over the last 20 years, we may already know how that experiment will turn out.